Last week, I picked up an iPhone 4s and got a chance to try out its premier feature, Siri. Siri is a digital assistant that lets you talk to your phone to get things done. You can turn the phone on, bring it up to your ear, and ask Siri to get driving directions, add reminders, find restaurants, play music, schedule meetings, and more.

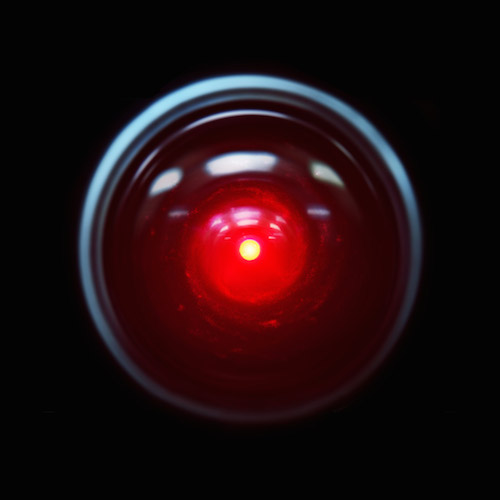

When I first heard about this, like many others, I wasn’t impressed. Why would I want to talk to a phone? The UI on an iPhone is efficient and, as a techie, I’m pretty good at using it. Even though we’ve seen it predicted in all sorts of sci-fi movies, from Scotty in Star Trek to Dr. Bowman in 2001: A Space Odyssey, I still wasn’t sure why talking would be preferable to other interfaces.

After using Siri for a week, I get it. Siri is a glimpse of the future.

In this post, I’ll tell you why that’s a very good thing.

It’s not voice recognition

First, let’s get something out of the way: Siri is not just voice recognition. Voice recognition by itself is nothing new and has been available for a while, even on phones. The important thing to understand is that voice recognition is merely the first step of what Siri does. It’s what happens after your voice has been transcribed that’s new and exciting.

Siri does a remarkably good job of natural language processing. In other words, it actually tries to understand human speech.

Before Siri, almost all voice recognition systems only worked if you used a very specific set of keywords and phrases (incantations, really). With Siri, you can speak more or less naturally and it (she?) does a surprisingly good job of understanding you. You don’t need to read a manual or cheat sheet to use Siri: just start talking and you’ll be amazed at how it seems to just work. It’s Clarke’s third law at play (any sufficiently advanced technology is indistinguishable from magic), and at times, Siri seems truly magical.

Context

Not only does Siri understand normal human speech, it’s also aware of the context around the conversation. For example, Siri is aware of both your location and where you live, so you can say:

Remind me to water the plants when I get home

As you pull into your driveway, the reminder will go off. Or, you can try:

Do I need an umbrella this weekend?

And Siri will check the forecast in your area, see that it’s 70 degrees and sunny, and say “no”. Siri also remembers the previous things you’ve said, so you can have a whole conversation with your phone:

- What pizza places are around here?

- I found a number of pizza restaurants fairly close to you. (a list comes up with Yelp reviews)

- What about burgers?

- I found 20 burger restaurants fairly close to you. (another list from Yelp)

- What’s the best one?

- I found 20 burger restaurants fairly close to you. I’ve sorted them by rating.

Natural language processing + context + apps = some amazing possibilities that go far beyond the “voice recognition” you’ve seen before.

Efficiency

At first, I thought Siri would only be useful when my hands were busy, such as while driving, and I never expected to talk to my phone otherwise. As it turns out, talking to Siri can be a huge time saver in almost any situation. For example, imagine I want to set an alarm that will go off at 9am every weekday. Here are my two options:

Option 1: touchscreen UI

- Push the power button

- Slide to unlock

- Swipe through app screens to the clock app and tap to open it

- Tap the alarms tab

- Tap the plus (+) to create a new alarm

- Use the spinners to set the time to 9am

- Tap the repeat menu

- Tap each of the weekdays individually

- Tap save

- Done.

Option 2: Siri

- Push the power button

- Hold the phone up to my ear and say “wake me up at 9am every weekday”

- Done.

We’re talking an order of magnitude faster. Similar time savings can be observed when getting directions (“how do I get home?”), scheduling meetings (“set up a meeting with Jon for 9am tomorrow about mobile strategy”), and many other tasks. Skipping dozens of menu taps and large amounts of text entry is a big boost in efficiency.

And here’s the kicker: the iPhone touchscreen UI is built incredibly well. It’s arguably one of the most intuitive and efficient UIs available. Despite that, for tasks like these it’s still an order of magnitude slower than Siri.

Accessibility

Speed and efficiency are great, but accessibility is why Siri is truly a glimpse of the future. Although the iPhone touchscreen UI is intuitive and beautiful, it’s still something that must be explored, experimented with, and learned. I’m a “power user”, so I find it easy; the average person, even with a best-in-class interface, will find it harder.

The beauty of Siri is that it makes all of the functionality of a high-tech smartphone available to everyone. Even the least tech-savvy person knows how to talk, give commands, and ask questions. As Siri progresses, the bar for using state-of-the-art technology will get lower and lower.

And after all, wasn’t that always Steve Jobs’ goal? Wasn’t he always striving towards technology that was accessible to anyone, with no training and no confusion? Siri is a small step in that direction: pick up your phone and, in plain English, tell it what you want.

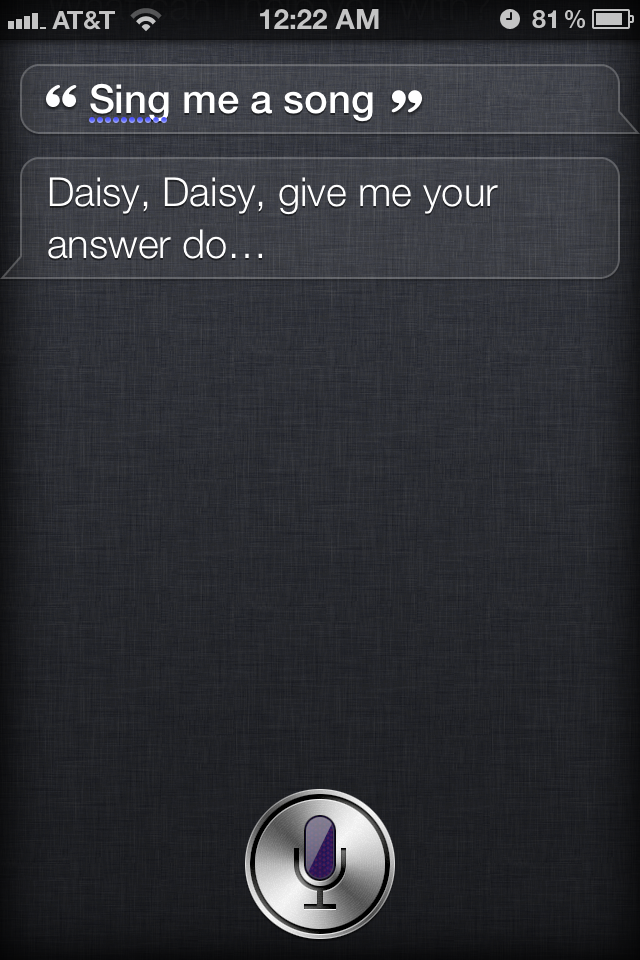

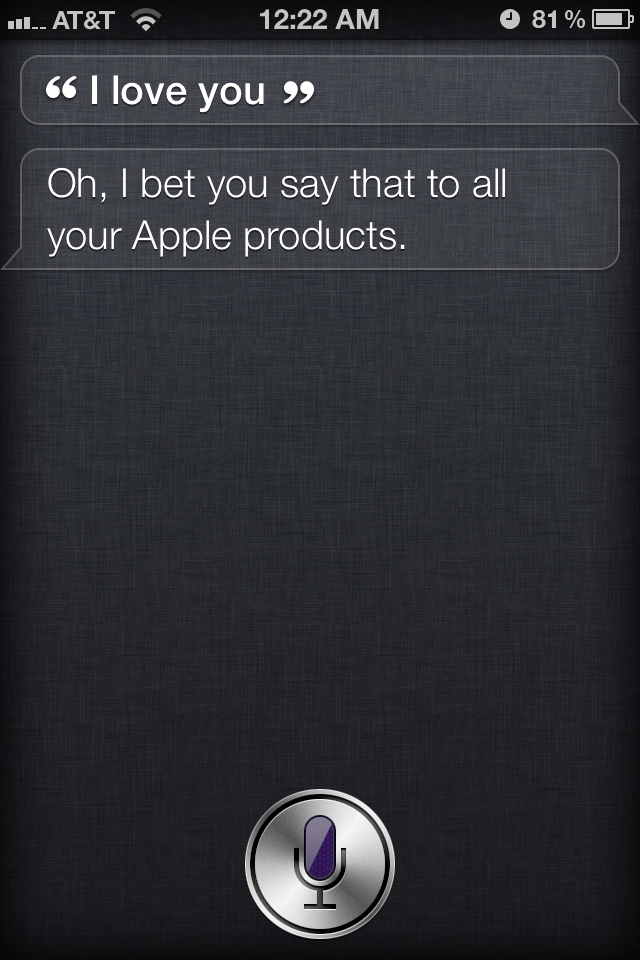

Personality

Finally, Siri has a personality. She can even be a bit cheeky.

There’s an entire site dedicated to Siri’s clever responses. But these are more than just easter eggs: they bring Siri, and your iPhone, to life. The iPhone has always done spectacularly well in terms of customer satisfaction; giving the phone a personality will make people love it. Siri will become an assistant, a friend, and even a part of you (we are cyborgs, after all). I suspect that after Siri, you’ll never be able to go back to a phone without it.

Just the beginning

Now, let’s not get ahead of ourselves. Siri is just the very first step. Actually, that’s not entirely accurate: Siri is yet another step in a long continuum of making computers ever more accessible, from punch cards, to the command line, to the mouse and keyboard, to touch screens, and now to natural language processing. There is still a great deal of work to do, but give Siri a try now to see where things are headed.

In the near future, I expect Siri to be integrated with countless more apps. For example, I’d love to see Shazam (“what song is this?”), New York Times (“what’s happening in the world?”), shopping apps (“is this a good deal?”), and social networking apps (“who is this guy?”). I wouldn’t be surprised to see some element of “learning” added as well, allowing Siri to become more personalized by using more information about you and your contacts (e.g. via Facebook, Twitter, and LinkedIn integration), getting better with accents and mannerisms of speaking, remembering previous conversations you’ve had and… who knows what else.

Herman van der Veer

If you enjoyed this post, you may also like my books, Hello, Startup and Terraform: Up & Running. If you need help with DevOps or infrastructure, reach out to me at Gruntwork.